Token-Based Usage

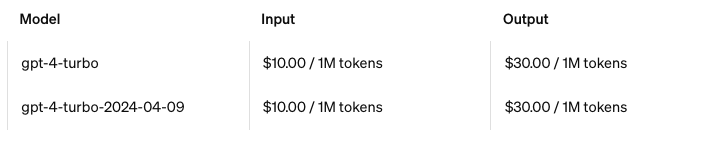

Closed-source LLMs such as OpenAI’s GPT-4 and Google’s Gemini are priced by the number of tokens they process. A token is the basic unit of text an LLM reads and writes, often a whole word or a piece of one.

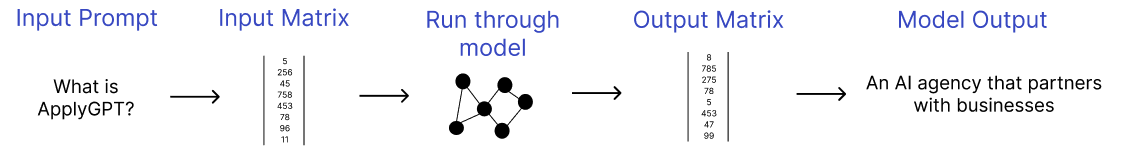

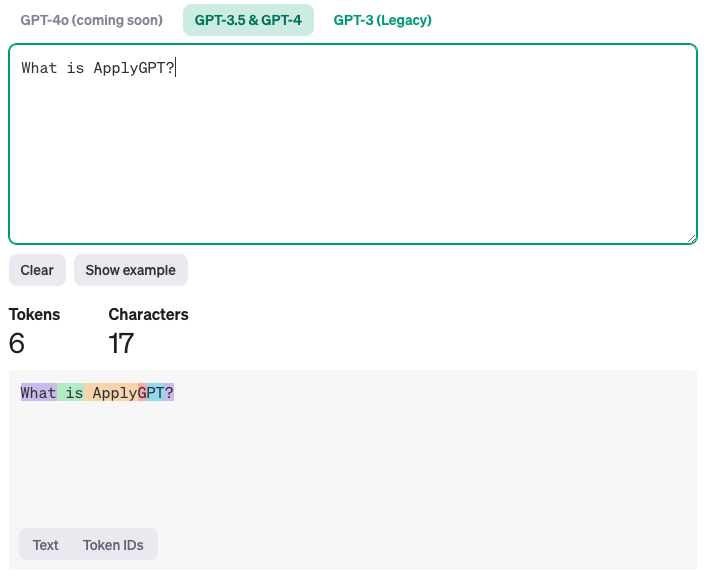

You are charged based on the number of input and output tokens. The longer your input prompt, the more you pay, and the longer the model’s output, the more you pay. To get an accurate sense of how many tokens your use case consumes, the OpenAI Tokenizer is a good starting point.
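Before reaching for a real tokenizer, a back-of-the-envelope estimate can help with budgeting. The sketch below assumes the commonly cited rule of thumb that one token is roughly 4 characters of English text. The function name `estimate_tokens` and the `chars_per_token` parameter are hypothetical, and the result is only an approximation: actual counts depend on the model’s tokenizer and the language of the text.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Roughly estimate a token count from character length.

    Assumption: ~4 characters per token for typical English text.
    This is a heuristic, not a real tokenizer; use the provider's
    tokenizer when you need exact counts.
    """
    if not text:
        return 0
    # Round up so that the estimate errs on the side of a higher cost.
    return math.ceil(len(text) / chars_per_token)


prompt = "Hello, how are you today?"
print(estimate_tokens(prompt))
```

Rounding up is a deliberate choice: when the estimate feeds into a budget, overestimating slightly is safer than underestimating.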

In the tokenizer’s output (the bottom area), each color marks a separate token; the example sentence splits into 6 input tokens. Input and output tokens are charged at different rates, as shown in the pricing diagram.

This means that for every 1 million input tokens consumed, you are billed $10. That works out to $10 / 1,000,000 = $0.00001 per input token.
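The arithmetic above can be turned into a small cost estimator. This is a minimal sketch: the $10 per 1M input tokens figure comes from the example above, while the output price in the usage line is a hypothetical placeholder, so check your provider’s pricing page for real rates. The function `request_cost` and its parameter names are illustrative, not part of any provider’s API.

```python
TOKENS_PER_MILLION = 1_000_000

# From the example above: $10 per 1 million input tokens.
INPUT_PRICE_PER_MILLION = 10.00


def price_per_token(price_per_million: float) -> float:
    """Convert a per-million-token price into a per-token price."""
    return price_per_million / TOKENS_PER_MILLION


def request_cost(
    input_tokens: int,
    output_tokens: int,
    input_price_per_million: float,
    output_price_per_million: float,
) -> float:
    """Estimate the USD cost of one request.

    Input and output tokens are billed separately, each at its own
    per-million-token rate.
    """
    input_cost = input_tokens * input_price_per_million / TOKENS_PER_MILLION
    output_cost = output_tokens * output_price_per_million / TOKENS_PER_MILLION
    return input_cost + output_cost


# Hypothetical output price, used only to illustrate the calculation.
HYPOTHETICAL_OUTPUT_PRICE_PER_MILLION = 30.00

print(price_per_token(INPUT_PRICE_PER_MILLION))
print(request_cost(6, 0, INPUT_PRICE_PER_MILLION, HYPOTHETICAL_OUTPUT_PRICE_PER_MILLION))
```

Because the two rates are passed in separately, the same function works for any model whose pricing is quoted per million input and output tokens.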